Movies by the numbers

Everything Everywhere All at Once (2022). Publicity still.

DB here:

Cinema, Truffaut pointed out, shows us beautiful people who always find the perfect parking space. Mainstream movies cater to us through their stories and subjects, protagonists and plots. But they have also been engineered for smooth pickup. Their use of film technique is calculated to guide us through the action and shape our emotional response to it.

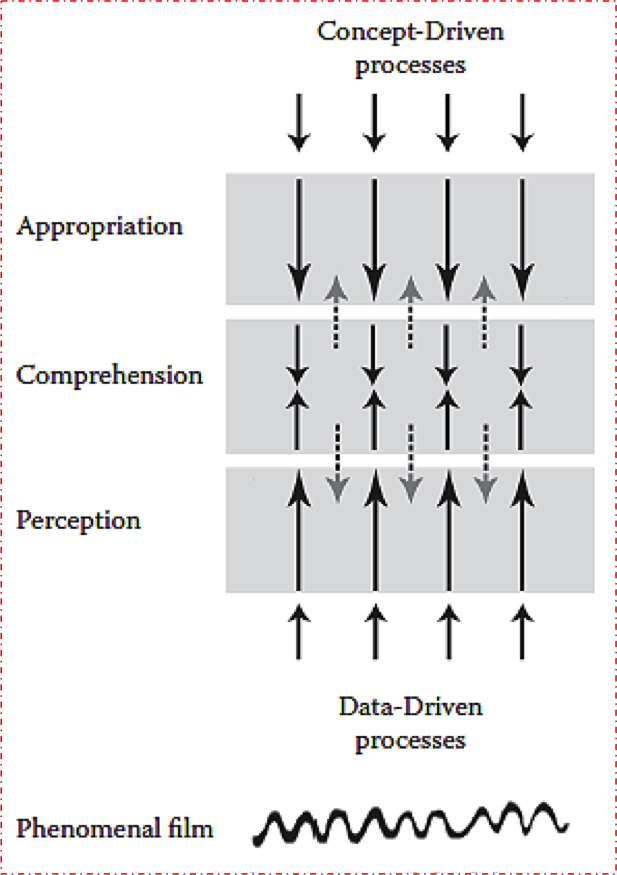

How this engineering works has fascinated film psychologists for decades. Over a century ago, Hugo Münsterberg proposed that the emerging techniques of the 1915 feature film made manifest the workings of the human mind. In ordinary life, we make sense of our surroundings by voluntarily shifting our attention, often in scattershot ways. But the filmmaker, through movement, editing, and close framings, creates a concentrated flow of information designed precisely for our pickup. Even memory and imagination, Münsterberg argued, find their cinematic correlatives in flashbacks and dream sequences. In cinema, the outer world has lost its weight and “has been clothed in the forms of our own consciousness.”

Over the decades, many psychologists have considered how the film medium has fitted itself to our perceptual and cognitive capacities. Julian Hochberg studied how the flow of shots creates expectations that guide our understanding of cinematic space. Under the influence of J. J. Gibson’s ecological theory of perception, Joseph Anderson reviewed the research that supported the idea that films feed on both strengths and shortcomings of the sensory systems we’ve evolved to act in the environment. In more recent years, Jeff Zacks, Joe Magliano, and other visual researchers have gone on to show how particular techniques exploit perceptual shortcuts (as in Dan Levin’s work on change blindness and continuity editing errors: https://vimeo.com/81039224). Outside the psychologists’ community, writers on film aesthetics have fielded similar arguments. An influential example is Noël Carroll’s essay “The Power of Movies.”

James Cutting’s Movies on Our Minds: The Evolution of Cinematic Engagement, itself the fruit of many years of intensive studies, builds on these achievements while taking wholly original perspectives as well. Comprehensive and detailed, it is simply the most complete and challenging psychological account of film art yet offered. I can’t do justice to its range and nuance here. Consider what follows as an invitation to you to read this bold book.

Laws of large numbers

The Martian (2015). Publicity still.

Cutting’s initial question is “Why are popular movies so engaging?” He characterizes this engagement (a more gripping sort than we experience in encountering plays or novels) as involving four conditions: sustained attention, narrative understanding, emotional commitment, and “presence,” a sense that we are on the scene in the story’s realm. Different areas of psychology can offer descriptions and explanations of what’s going on in each of these dimensions of engagement.

His prototype of popular cinema is the Hollywood feature film—a reasonable choice, given its massive success around the world. The period he considers runs chiefly from 1920 to 2020. He has run experimental studies with viewers, but the bulk of his work consists of scrutinizing a large body of films. Depending on the question he’s posing, he employs samples of various sizes, the largest including up to 295 movies, the smallest about two dozen. Although he insists he’s not concerned with artistic value, most are films that achieved some recognition as worthwhile. He and his research team have coded the films in their sample according to the categories he’s constructed, and sometimes that process has entailed coding every frame of a movie.

Like most researchers in this tradition, Cutting picks out devices of style and narrative and seeks to show how each one works in relation to our mind. His list is far broader than that offered by most of his predecessors; he considers virtually every film technique noted by critics. (I think he pins down most of those we survey in Film Art.) In some cases he has refined standard categories, such as suggesting varieties of reaction shot.

Starting with properties of the image (tonality, lens adjustments, mise-en-scene, framing, and scale of projection), he moves to editing strategies, the soundtrack, and then to matters of narrative construction. For each one he marshals statistical evidence of the dominant usage we find in popular films. For example, contemporary films average about 1.3 people onscreen at all times, while in the 1940s and 1950s, that average was about 2.5. Across his entire sample, almost two-thirds of all shots show conversations, the backbone of cinematic narrative. (So much for critics who complain that modern movies are overbusy with physical action.) And people are central: 90% of all shots show the head of one or more characters.

Some of these findings might appear to be simply confirming what we know intuitively. But Big Data reveal patterns that neither filmmakers nor audiences have acknowledged. Granted, we’ve all assumed that reaction shots are important in cueing the audience how to respond. Cutting argues that despite their comparative rarity (about 15% of a film’s total) they are central to our overall experience: they are “popular movies’ most important narrational device.” They invite the audience to engage with the character’s emotions, and they encourage us to predict what will happen next.

This last role emerges in what Cutting calls the “cryptic reaction shot,” in which the response is ambivalent. Such shots show a moment when a character doesn’t speak in a conversation (for example, Jim’s reactions in my Mission: Impossible sequence). Filmmakers seem to have learned that

these shots are an excellent way to hook the viewer into guessing what the character is thinking—what the character might have said and didn’t. They also serve as fodder for predicting what the character will do next (174-175).

Cryptic reactions gather special power at the scene’s end. Whereas films from the 1940s and 1950s ended their scenes with such shots about 20% of the time, today’s films tend to end conversations with them almost two-thirds of the time. Since reading Cutting’s book I’ve noticed how common this scene-ending reaction shot is in movies (TV shows too). It’s a storytelling tactic that nobody, as far as I know, had previously spotted.

Similarly, Cutting tests Kristin’s model of feature films’ prototypical four-part structure. He finds it mostly valid, both in terms of data clustering (movement, shot lengths, etc.) and viewers’ intuitions about segmentation. But who would have expected what he found about what screenwriters call the “darkest moment”? Using measures of luminance, he finds that “this point literally is, on average, the darkest part of a movie segment” (285).

Cutting has found resourceful ways to turn factors we might think of as purely qualitative into parameters that can be counted and compared. To gauge narrative complexity, he enumerates the number of flashbacks, the amount of embedding (stories within stories), and particularly the number of “narrational shifts” in films. Again, there is a change across history.

Movies jump around among locations, characters, and time frames considerably more often than they used to. . . . Scenes and subscenes have gotten shorter. In 1940, they averaged about a minute and a half in duration, but by 2010 they were only about 30 seconds long (271-272).

His illustrative example is the climax of The Martian, where in four minutes and 35 shifts, the narration cuts together seven locations, all with different characters. Something similar goes on in the motorcycle chase of Mission: Impossible II and the final seventy minutes of Inception.

These examples show that Cutting is going far beyond simply tagging regularities in an atomistic fashion. Throughout, he is proposing that these patterns perform functions. For the filmmakers, they are efforts to achieve immediate and particular story effects, highlighting this or that piece of information. Showing few people in a shot helps us concentrate on the most important ones. Conversations are the most efficient way to present goals, conflicts, and character relationships. Editing among several lines of action at a climax builds suspense. He suggests that because viewer mood is correlated with luminance, the darkest moment is triggering stronger emotional commitment.

But Cutting sees broader functions at work underneath filmmakers’ local intentions. Movies’ preferred techniques, the most common items on the menu, smoothly fit our predispositions—our tendencies to look at certain things and not others, to respond empathically to human action, to fit plots together coherently. Filmmakers have intuitively converged on powerful ways to make mainstream movies fit humans’ “ecological niche.”

And those movies have, across a century, found ways to snuggle into that niche ever more firmly. As we have learned the skills of following movie stories, filmmakers have pressed us to go further, stretching our sensory capacities, demanding faster and subtler pickup of information. Cutting’s numbers lead us to a conception of film history, the “evolution of cinematic engagement” promised in his subtitle.

Film history without names

Suspense (Weber and Smalley, 1913).

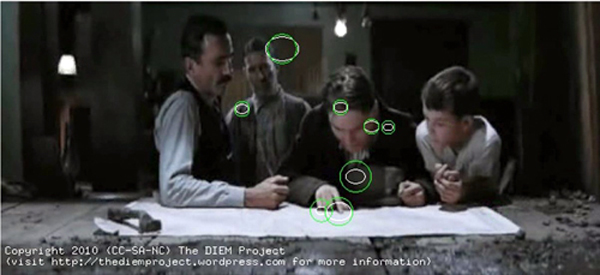

Central to Cutting’s psychological tradition is the idea that engagement depends minimally on controlling and sustaining attention. The techniques itemized almost invariably function to guide the viewer to see (or hear) the most important information. Filmmakers discovered that you can intensify attention by cooperation among the cues. Given that humans, especially faces, carry high information in a scene, you can use lighting, centered position, frontal views, close framings, figure movement, and other features of a shot to reinforce the central role of the humans that propel the narrative. When the key information doesn’t involve faces or gestures, you can give objects the same starring role.

Movies on Our Minds is very thorough in showing through statistical evidence that all these techniques and more combine to facilitate our attention. As an effort of will you can focus your attention on a lampshade, but it won’t yield much. The line of least resistance is to go with what all the cues are driving you to. But the statistical evidence also points to changes across history. What’s going on here?

Most basically, speedup. Cutting asked undergraduate students to go through films twice, once for basic enjoyment and then frame by frame, recording the frame number at the beginning and ending of each shot. He found that by and large the students enjoyed the older films, but some complained that these older movies were slower than what they ordinarily watched.

This impression accords with both folk wisdom and film research. Most viewers today note that movies feel very fast-moving, compared with older films. There’s also a considerable body of research indicating that cutting rates have accelerated since at least the 1960s. More loosely, I suspect most people think that story information is given more swiftly in modern movies; our films feel less redundant than older ones. Exposition can be very clipped. Abrupt changes from scene to scene are accentuated by the absence of “lingering” punctuation by fade-outs or dissolves. Indeed, one scene is scarcely over before we hear dialogue or sound effects from the next one. Characters’ motives aren’t always spelled out in dialogue but are evoked by enigmatic images or evocative sound. The Bourne Identity attracted notice not just for its fragmentary editing but also for its blink-and-you’ll-miss-them “threats on the horizon”: mere glimpses of what earlier films would have dwelled on more. From this angle, Everything Everywhere All at Once represents a kind of cinema that grandpa, and maybe dad, would find hard to follow. (Actually, I’m told that some audiences today have trouble too.)

In The Way Hollywood Tells It, I suggested some causal factors shaping speedup, including various effects of television. Cutting grants that these may be in play, but just as he looks for an underlying pattern of functional factors in his ecology of the spectator, he posits a broad process of cinematic evolution similar to that in biology.

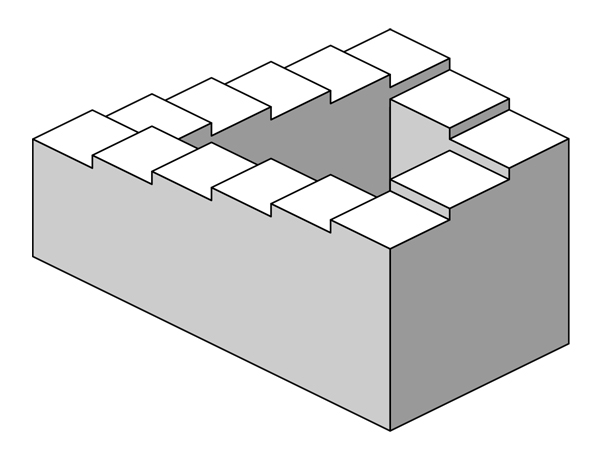

Look at film history simply as a succession of movies. From a welter of competing alternatives, some techniques are selected and prove robust. They are replicated, modified in relation to the changing milieu. Others fail. For instance, the split-screen telephone shot of early cinema, as in the above frame from Suspense, became “a failed mutation” when shot/reverse-shot editing for phone calls became dominant. As the main line of descent has strengthened, variation has come down to “selected tweaks” like eyelights and Steadicam movement.

Within this framework, filmmakers and audiences participate in a give-and-take. Through trial and error, early filmmakers collectively found ways to make films mesh with our perceptual proclivities. As viewers became more skilled in following a movie’s lead, there was pressure on filmmakers to go further and make more demands. If attention could be maintained, then shots could be shorter, scenes could move faster, redundancy could be cut back, complexity could be increased.

Viewers responded positively, embracing the new challenges of quicker pickup. As viewers became more adept, filmmakers could push ahead boldly, toward films like Memento and Primer. The most successful films, often financially rewarding ones like The Martian and Inception, suggest that filmmakers have continued to fit their boundary-pushing impulses to popular abilities.

Which means that audiences have adapted to these demands. But this isn’t adaptation in the strong Darwinian sense. With respect to his students’ reactions, Cutting writes:

I think . . . that the eye of a 20-year-old in the 2010s was faster at picking up visual information than the eye of a 20-year-old in the 1940s. This is not biological evolution. This is cultural education, however incidental it might be.

This process of cultural education, he suggests, can be understood using Michael Baxandall’s concept of the “period eye.” Baxandall studied Italian fifteenth-century painting and posited that an adult in that era saw (in some sense) differently than we do today. Granted, people have a common set of perceptual mechanisms, but cultural differences intervene. Baxandall traced some socially-grounded skills that spectators could apply to paintings. In parallel fashion, given the rapidity of cultural change in the twentieth century, young people now may have gained an informal visual education, a training of the modern eye in the conventions of media.

Cutting insists that he isn’t suggesting that our attention spans have recently decreased, as many maintain. Instead, the idea of a period eye centers on

the growth and improvement of visual strategies as shaped by culture. If this idea is correct, then contemporary undergraduates have reason to complain about movies from the 1940s and 1950s that I asked them to watch.

By this account, film teachers have to recognize that their students, for whom any film before 2000 is an old movie, are possessed of a constantly changing “period eye.”

Failed mutations, or revision and revival?

City of Sadness (Hou, 1989).

I do have some minor reservations, which I’ll introduce briefly.

First, I don’t think that Baxandall’s idea of a period eye is a good fit for the dynamic that Cutting has identified. Baxandall’s concern is with a narrow sector of the fifteenth-century public: “the cultivated beholder” whom “the painter catered for.”

One is talking not about all fifteenth-century people, but about those whose response to works of art was important to the artist—the patronizing class, one might say. In effect this means a rather small proportion of the population: mercantile and professional men, members of confraternities or as individuals, princes and their courtiers, the senior members of religious houses. The peasants and the urban poor play a very small part in the Renaissance culture that most interests us now.

Ordinary viewers could recognize Jesus and Mary, the Annunciation or the Crucifixion, but for Baxandall the “period eye” involved a specific skill set. This was derived from such domains as business, surveying, ready reckoning, and widespread artistic concepts like “foreshortening” or “stylistic ease.” The study of geometry prepared the perceiver to appreciate the virtuosity of perspective or proportion, but the untutored viewer could only marvel at the naturalness of the illusion.

It seems to me that Cutting’s 20-year-olds are not prepared perceivers in Baxandall’s sense. True, they may be alert to faulty CGI or a lame joke, but the whole point of focusing on mass-entertainment movies is that they engage multitudes, not coteries. The techniques Cutting explores are immediately grasped by nearly everybody, and no esoteric skills are necessary to feel their impact. Baxandall’s viewer is able to appreciate a painter’s rendition of bulk because he’s used to estimating a barrel’s capacity, but all Cutting’s viewer needs to get everything is just to pay attention.

Because the skills of following modern movies are so widespread, I wonder if Cutting’s case better fits the explorations of Heinrich Wölfflin, who flirted with the idea that “seeing as such has its own history, and uncovering these ‘optical strata’ has to be considered the most elementary task of art history.” Throughout his late career Wölfflin struggled to make the “history of vision” thesis intelligible. At times he implied that everyone in a given era “saw” in a way different from people in other eras. At other times he proposed that of course everyone sees the same thing but “imagination” or “the spirit” of the period and place shape how we understand what we see. This is murky water, and you can sense my skepticism about it. I don’t see how we could give the “period eye” for cinema much oomph on this front, but I think we might salvage Cutting’s central point. See below.

Secondly, by concentrating on mass-market cinema, “the movies,” and suggesting they fit our evolved capacities, we can easily overlook the alternatives. An obvious example is the earliest cinema, which did involve some precise perceptual appeals (centering, frontality, selective lighting, emphasis through foreground/background relations) but not all the ones associated with editing. Given that tableau cinema had a longish ride (1895-1920 or so), it’s not inconceivable that, had circumstances been different, we would still have a cinema of distant framings and static long takes.

Cutting is aware of this. Rewind the tape of history, he says, and eliminate factors like World War I, and things could have turned out differently. But where would a long-running tableau cinema leave the story of cinema’s dynamic of natural selection? Would we have simply a steady state, without the acceleration of the last sixty years? Or would we look within the long history of tableau cinema for mutations and failed adaptations?

We have contemporary examples, in what has come to be called “slow cinema.” Hou Hsiao-hsien, Theo Angelopoulos, and other modern filmmakers have exploited the static long take for particular aesthetic effects. In another universe, they are the “movies” that hordes flock to see. Tableau cinema seems to me not a failed mutation, replaced by a style that more tightly meshed with spectators’ proclivities; it was an aesthetic resource that could be revived for new purposes. On a smaller scale, something like this happened with split-screen phone calls (as in Down with Love and The Shallows).

As the other arts show us, the past is available for re-use in fresh ways. Maybe nothing really goes away.

Which brings me to my last point. Baxandall’s emphasis on skills reminds us that we can acquire a new “eye” for appreciating films outside the mainstream. Cutting’s 20-somethings can learn to appreciate and even enjoy films that might strike them as slow. We call this the education of taste.

My inclination is to see the contemporary embrace of speedup as based in filmmakers’ and viewers’ more or less unthinking choices about taste. Indeed, Baxandall suggests that the period eye is a matter of “cognitive style,” a set of skills one person may have and another may lack.

There is a distinction to be made between the general run of visual skills and a preferred class of skills specially relevant to the perception of works of art. The skills we are most aware of are not the ones we have absorbed like everyone else in infancy, but those we have learned formally, with conscious effort: those which we have been taught.

Once the skills have been taught, we can exercise them for pleasure.

If a painting gives us opportunity for exercising a valued skill and rewards our virtuosity with a sense of worthwhile insight about that painting’s organization, we tend to enjoy it: it is to our taste.

I’d suggest that we have “overlearned” the skills solicited by mainstream movies and made them the automatic default for our taste. But we can also learn the skills for appreciating 1910s tableau cinema or City of Sadness. As we do so, we find we have cultivated a new dimension of our tastes. From this standpoint, the sensitive appreciators of slow cinema are far more like Baxandall’s educated Renaissance beholders than are the Hollywood mass audience. Perhaps to enjoy Feuillade or Hou today is to possess a genuine “period eye.”

None of this undercuts Cutting’s findings. But given the variety of options available, I’d argue that what the history of cinema reveals, among many other things, is a history of styles—some of which are facile to pick up, for reasons Cutting and his tradition indicate, and others which require effort and training and an open mind. Not clear-cut evolution, then, but just the blooming plurality we find in all the arts, with some styles becoming dominant and normative and others becoming rarefied . . . until artists start to make them mainstream. And the whole ensemble can be mixed and remixed in unpredictable ways.

There’s far too much in Movies on Our Minds to summarize here. It’s a feast of ideas and information, presented in lively prose. (The studies that the book rests upon are far more dependent upon statistics and graphs.) I should disclose that I read the book in manuscript and wrote a blurb for it. Consider this entry, then, as born of a strong admiration for James’s project.

Cutting’s book rests on years of intensive studies. Go to his website for a complete list. Most recently, he has traced a rich array of phone-call regularities across a hundred years. He has been a recurring player on this blog, notably here and here. James and I spent a lively week watching 1910s films at the Library of Congress, with results recorded here and here. The last link also provides more examples of revived “defunct” devices.

The tradition of film-psychological research I rehearse includes Hugo Münsterberg, The Film: A Psychological Study in Hugo Munsterberg on Film: The Photoplay: A Psychological Study and Other Writings, ed. Allan Langdale (Routledge, 2001; orig. 1916); In the Mind’s Eye: Julian Hochberg on the Perception of Pictures, Films, and the World, ed. Mary A. Peterson, Barbara Gillam, H. A. Sedgwick (New York: Oxford, 2007); Joseph D. Anderson, The Reality of Illusion: An Ecological Approach to Cognitive Film Theory (Southern Illinois University Press, 1996); Jeffrey Zacks, Flicker: Your Brain on Movies (Oxford, 2014). Noël Carroll’s essay “The Power of Movies” is included in his collection Theorizing the Moving Image (Cambridge, 1996).

Michael Baxandall’s most celebrated work is Painting and Experience in Fifteenth-Century Italy (Oxford, 1972); citations come from pages 29-29. My mention of Wölfflin relies on Principles of Art History: The Problem of the Early Development of Style in Early Modern Art, trans. Jonathan Blower (Getty Research Institute, 2015). My citation comes from p. 93.

One reference point for Cutting’s work is a book Kristin and I wrote with Janet Staiger, The Classical Hollywood Cinema, discussed here. See also Kristin’s Storytelling in the New Hollywood, which elaborates the argument for the four-part model, and my books On the History of Film Style and The Way Hollywood Tells It, along with the web video “How Motion Pictures Became the Movies.”

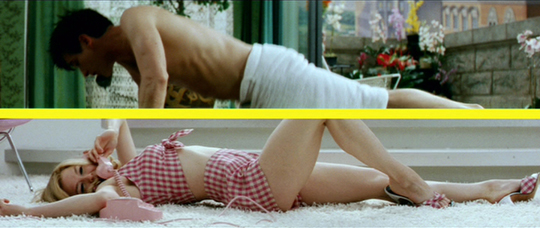

Bye Bye Birdie (1963).

Learning to watch a film, while watching a film

The Hand That Rocks the Cradle (1992).

DB here:

“Every film trains its spectator,” I wrote a long time ago. In other words: A movie teaches us how to watch it.

But how can we give that idea some heft? How do movies do it? And what are we doing?

Many menus

Tinker, Tailor, Soldier, Spy (2011).

In my research, I’ve found the idea of norms a useful guide to understanding how filmmakers work and how we follow stories on the screen. A norm isn’t a law or even a rule; it’s, as they say in Pirates of the Caribbean, more of a guideline. But it’s a pretty strong guideline. Norms exert pressure on filmmakers, and they steer viewers in specific directions.

Genre conventions offer a good example. The norms of the espionage film include certain sorts of characters (secret agents, helpers, traitors, moles, master minds, innocent bystanders) and situations (tailing targets, pursuits, betrayal, codebreaking, and the like). The genre also has some characteristic storytelling methods, like titles specifying time and place, or POV shots through binoculars and gunsights.

But there are other sorts of norms than genre-driven ones. There are broader narrative norms, like Hollywood’s “three-act” (actually four-part) plot structure, or the ticking-clock climax (as common in romcoms and family dramas as in action films). There are also stylistic norms, such as the shoulder-level camera height and classic continuity editing, the strategy of carving a scene into shots that match eyelines, movements, and other visual information.

Thinking along these lines leads you to some realizations. First, any film will instantiate many types of norms (genre, narrative, stylistic, et al.). Second, norms are likely to vary across history and filmmaking cultures. The norms of Hollywood are not the same as the norms of American Structural Film. There are interesting questions to be asked about how widespread certain norms are, and how they vary in different contexts.

Third, some norms are quite rigid, as in sonnet form or in the commercial breaks mandated by network TV series. Other norms are flexible and roomy (as guidelines tend to be). There are plenty of mismatched over-the-shoulder cuts in most movies we see, and nobody but me seems bothered.

More broadly, norms exist as options within a range of more or less acceptable alternatives. Norms form something of a menu. In the spy film, the woman who helps the hero might be trustworthy, or not. The apparent master mind could turn out to be taking orders from somebody higher up, perhaps somebody supposedly on your side. One scene might avoid continuity editing and instead be presented in a single long take.

Norms provide alternatives, but they weight them. Certain options are more likely to be chosen than others; they are defaults. (Facing a menu: “The chicken soup is always safe.”) An action film might present a fight or a chase in a single take (Widows, Atomic Blonde), but it would be unusual if every scene in the film were played out this way. Not forbidden, but rare. Avoiding the default option makes the alternative stand out as a vivid, willed choice.

Very often, critics take most of the norms involved for granted and focus on the unusual choices that the filmmakers have made. One of our most popular entries, the entry on Tinker, Tailor, Soldier, Spy, discusses how the film creates a demanding espionage movie by its manipulations of story order, characterization, character parallels, and viewpoint. It isn’t a radically “abnormal” film, but it treats the genre norms in fresh ways that challenge the viewer.

Because, after all, viewers have some sense of norms too. Norms are part of the tacit contract that binds the audience to creators. And the viewer, like the critic, looks out for new wrinkles and revisions or rejections of the norm–in other words, originality.

Picking from the menu

Norms of genre, narrative, and style are shared among many films in a tradition or at a certain moment. We can think of them as “extrinsic norms,” the more or less bounded menu of options available to any filmmaker. By knowing the relevant extrinsic norms, we’re able to begin letting the movie teach us how to watch it.

The process starts early. Publicity, critical commentary, streaming recommendations, and other institutional factors point up genre norms, and sometimes frame the film in additional ways–as an entry in a current social controversy (In the Heights, The Underground Railroad), or as the work of an auteur. You probably already have some expectations about Wes Anderson’s The French Dispatch.

Then, as we get into the film, norms quickly click into place. The film signals its commitment to genre conventions, plot patterning, and style. At this point, the film’s “teaching” consists largely of just activating what we already know. To learn anything, you have to know a lot already. If the movie begins with a character recalling the past, we immediately understand that the relevant extrinsic norm isn’t at that point a 1-2-3 progression of story events, but rather the more uncommon norm of flashback construction, which rearranges chronology for purposes of mystery or suspense. Within that flashback, though, it’s likely that the 1-2-3 default will operate.

As the film goes on, it continues to signal its commitment to extrinsic norms. An action scene might be accompanied by a thunderous musical score, or it might not; either way, we can roll with the result. The characters might let us into their thinking, through voice-over or dream sequences, or we might, as in Tinker, Tailor, be confronted with an unusually opaque protagonist whose motives are cloudy. The extrinsic norms get, so to speak, narrowed and specified by the moment-by-moment working out of the film. Items from the menu are picked for this particular meal.

That process creates what we can call “intrinsic norms,” the emerging guidelines for the film’s design. In most cases, the film’s intrinsic norms will be replications or mild revisions of extrinsic ones. For all its distinctiveness, in most respects Tinker, Tailor adheres to the conventions of the spy story. And as we get accustomed to the film’s norms, we focus more on the unfolding action. We’ve become expert film watchers. We learn quickly, and our “overlearned” skills of comprehension allow us to ignore the norms and, as we say, get into the story.

Narration, the patterned flow of story information, is crucial to this quick pickup. Even if the film’s world is new to us, the narration helps us to adjust through its own intrinsic norms. The primary default would seem to be “moving spotlight” narration. Here a “limited omniscience” attaches us to one character, then another, within a scene or from scene to scene. We come to expect some (not total) access to what every character is up to.

In Curtis Hanson’s The Hand That Rocks the Cradle, we’re initially attached to the pregnant Claire Bartel, who has moved to Seattle with her husband Michael and daughter Emma. When Claire is molested by her gynecologist Dr. Mott, she reports him. The scandal drives him to suicide, and his distraught wife miscarries. She vows vengeance on Claire. Thereafter, the plot shuttles us among the activities of Claire, Mrs. Mott, Michael, the household handyman Solomon, and family friends like Michael’s former girlfriend Marlene. The result is a typical “hierarchy of knowledge”–here, with Claire usually at the bottom and Mrs. Mott near the top. We don’t know everything (characters still harbor secrets, and the narration has some of its own), but we typically know more about motives, plans, and ongoing action than any one character does.

More rarely, instead of a moving spotlight, the film may limit us to only one character’s range of knowledge. Again, scene after scene will reiterate the “lesson” of this singular narrational norm. That repetition will make variations in the norm stand out more strongly. Hitchcock’s North by Northwest is almost completely restricted to Roger Thornhill, but it “doses” that attachment with brief asides giving us key information he doesn’t have. Rear Window and The Wrong Man, largely confined to a single character’s experience, do something similar at crucial points.

Sometimes, however, a film’s opening boldly announces that it has an unusual intrinsic norm. Thanks to framing, cutting, performance, and sound, nearly all of Bresson’s A Man Escaped rigorously restricts us to the experience of one political prisoner. We don’t get access to the jailers planning his fate, or to men in other cells–except when he communicates with them or participates in communal activities, like washing up or emptying slop buckets.

The apparent exception: The film’s opening announces its intrinsic norm in an almost abstract way. First, we get firm restriction. There are fairly standard cues for Fontaine’s effort to escape from the police car that’s carrying him. Through his optical POV, we see him grab his chance when the driver stops for a passing tram.

The film’s title and the initial situation let us lock onto one extrinsic norm of the prison genre: the protagonist will try to escape. Knowing that we know this, Bresson can risk a remarkable revision of a stylistic norm.

Fontaine bolts, but Bresson’s visual narration doesn’t follow him. The camera stays stubbornly in the car with the other prisoner while Fontaine’s aborted escape is “dedramatized,” barely visible in the background and shoved to the far right frame edge. He is run down and brought back to be handcuffed and beaten.

The shot announces the premise of spatial confinement that will dominate the rest of the film. The narration “knows” Fontaine can’t escape and waits patiently for him to be dragged back. In effect, the idea of “restricted narration” has been decoupled from the character we’ll be restricted to. This is the film’s first, most unpredictable lesson in stylistic claustrophobia.

Got a light?

Most intrinsic norms aren’t laid out as boldly as the opening of A Man Escaped, but ingenious filmmakers may provide some variants. Take a fairly conventional piece of action in a suspense movie. A miscreant needs to plant evidence that incriminates some innocent soul.

In Strangers on a Train, that evidence is a cigarette lighter. Tennis star Guy Haines shares a meal with pampered sociopath Bruno Antony, whose tie sports colorful lobsters. Bruno steals Guy’s distinctive cigarette lighter.

Bruno has proposed that they exchange murders: He will kill Guy’s wife Miriam, who’s resisting divorce, and Guy will kill Bruno’s father. Bruno cheerfully strangles Miriam at a carnival, aided by the lighter.

When Guy doesn’t go through with his side of the deal, Bruno resolves to return to the scene of Miriam’s death and leave the lighter to incriminate Guy. The film’s climax consists of the two men fighting on a merry-go-round gone berserk. Although Bruno dies asserting Guy’s guilt, the lighter is revealed in his hand. Guy is exonerated.

Once the lighter is introduced in the early scenes, it comes to dominate the last stretch of the film. In scene after scene, Hitchcock emphasizes Bruno’s possession of it. Sometimes it’s only mentioned in dialogue, but often we get a close-up of it as Bruno looks at it thoughtfully–here, brazenly, while Guy’s girlfriend Ann is calling on him.

When Bruno picks up a cheroot or a cigarette, we expect to see the lighter.

One of the film’s most famous set-pieces involves Bruno straining to retrieve the lighter after it has fallen through a sidewalk grating.

Bruno has dropped it before, during Miriam’s murder, but then he notices and retrieves it. It’s as if this error has shown him how he might frame Guy if necessary. The image of the lighter in the grass previews for us what he plans to do with it later.

What does the lighter have to do with norms? Most obviously, Strangers on a Train teaches us to watch for its significance as a plot element. It’s not only a potential threat, but also Bruno’s intimate bond to Guy, as if Bruno has replaced Ann, who gave Guy the lighter. The film also invokes a normalized pattern of action–a character has an object he has stolen and will plant to make trouble–and treats it in a repeated pattern of visual narration. The character looks at the object; cut to the object; cut back to the character in possession of the object, waiting to use it at the right moment. Our ongoing understanding of the lighter depends on the norm-driven presentation of it.

Once we’re fully trained, Hitchcock no longer needs to show us the lighter at all. En route to the carnival to plant the lighter, Bruno lights a cigarette with the lighter, although his hands conceal it. But then the train passenger beside him asks for a light.

In order to hide the lighter, Bruno laboriously pockets it and fetches out a book of matches.

If we saw only this scene, we might not have realized what’s going on, but it comes long after the narrational norm has been established. We can fill out the pattern and make the right inference. Bruno wants no witness to see this lighter.

The hand that cradles the rock

In The Hand That Rocks the Cradle, under the name Peyton Flanders, Mrs. Mott becomes nanny to Claire’s daughter and infant son. Pretending to be a friendly helper, she subverts Claire’s daily routines and her trusting relationship with Michael. As in most domestic thrillers, the accoutrements of upper-middle-class lifestyle–a baby monitor, a Fed Ex parcel, expensive cigarette lighters, asthma inhalers, wind chimes–get swept up in the suspense. Peyton weaponizes these conveniences, and through a somewhat unusual narrational norm the film trains us to give her almost magical powers.

We get Peyton’s early days in the household filtered through her point of view. Classic POV cutting is activated during her job interview. She notices that Claire’s pin-like earring drops off and she hands it back to her.

Attachment to Peyton gets more intense when she sees the baby monitor and then fixates on the baby.

We’re then initiated into her tactics, and to the film’s way of presenting them. Serving supper, Claire doesn’t notice that her earring drops off again. Peyton does.

Breaking with her POV, the narration shifts to Claire and Michael talking about hiring her. But this cutaway to them has skipped over a crucial bit of action: Peyton has picked up the earring. Unlike Hitchcock, director Curtis Hanson doesn’t give us a close-up of the important object in the antagonist’s hand. In a long shot we simply see Peyton studying her fingers. Some of us will infer what she’s up to; the rest of us will have to wait for the payoff.

After another cutaway to the couple, Peyton “discovers” the earring in the baby’s crib. Her show of concern for his safety seals the hiring deal and begins her long campaign to prove that Claire is an unfit mother.

This elliptical presentation of Peyton’s subterfuges rules the middle section of the film. Selective POV shots suggest what she might do, but we aren’t shown her doing it–only the results. For instance, Claire lays out a red dress for a night out. Peyton sees it, then sees some perfume bottles.

Cut to Claire and then to an arriving guest, and presto. When she returns to the mirror, her dress is suddenly revealed as having a stain.

Later, Claire agrees to send off Michael’s grant application during her round of errands. Once she gets to the Fed Ex office, she will discover the missing envelope. Before that, though, we get another variant of the intrinsic norm showing Peyton’s trickery.

In the greenhouse, while Claire is watering plants, Peyton spots the envelope in her bag. We don’t see her take it, and there’s even a hint that she hasn’t done so. A nifty shot lets us glimpse her yanking her arm away as Claire approaches. It’s not clear that she has anything in her hand.

In what follows, the narration confirms Peyton’s theft while building up the threat level.

If she’s caught, this woman will not go away quietly.

While cozying up to the children–Peyton cuddles with Emma and even secretly breast-feeds baby Joe–Peyton eliminates all of Claire’s allies. By now we know her strategy, so after she suggests to Claire that the handyman Solomon has been molesting little Emma, all the narration needs is to show us Claire discovering a pair of Emma’s underwear in his toolbox. As with Bruno’s pocketing the lighter in Strangers on a Train, we’re now prepared to fill in even more of what’s not shown: here, Peyton framing Solomon.

Michael, a furtive smoker, sometimes shares a cigarette with Marlene. So it’s easy for Peyton to plant Marlene’s lighter in Michael’s sport coat for Claire to discover. Again, the moment of the theft has given her quasi-magical powers. She sees Marlene’s lighter in her handbag in the front seat.

Again thanks to a cutaway, we don’t see her take it. Indeed, it’s hard to see how she could have; she comes out of the back seat with an armload of plants.

But later Marlene will tell Michael (i.e., us) that she’s lost her lighter, and a dry cleaner will find it and show it to Claire.

The attacks have escalated, with Claire now suspecting Michael of infidelity. She confronts him without knowing that Michael has invited friends to a surprise party for her. Her angry accusations are overheard by the guests, and this public display of her anxieties takes her to a new low.

Peyton’s revenge plan is almost wholly consummated, so we stop getting the elliptical POV treatment of her thefts. Instead, the plot shifts to investigations: first Marlene discovers Peyton’s real identity, with unhappy results, and at the climax Claire does. Her POV exploration of the empty Mott house counterbalances Peyton’s early probing of Claire’s household. When she sees Mrs. Mott’s breast pump–another domestic object now invested with dread–she realizes why baby Joe no longer wants her milk.

This is the point, fairly common in the thriller, when the targeted victim turns and fights back.

In The Hand That Rocks the Cradle, a familiar action scheme–someone swipes something and plants it elsewhere–is handled through an unusual narrational norm. The scenes showing Peyton’s pilfering skip a step, and they momentarily let us think like her, nuts though she is. Thanks to editing that deletes one stage of the standard shot pattern, the film trains us to see how banal domestic items, deployed as weapons, can destroy a family. In the course of learning this, maybe the movie makes us feel smart.

Arguably, we’re able to fill in the POV pattern in The Hand That Rocks the Cradle because we’ve learned from encounters with movies that used the standard action scheme, including Strangers on a Train. This is one reason film style has a history. Dissolves get replaced by fades, exposition becomes more roundabout, endings become more open. As audiences learn technical devices, intrinsic norms recast extrinsic ones and some movies become more elliptical, or ambiguous, or misleading. All I’d suggest is that we get accustomed to such changes because films teach us how to understand them. And we enjoy it.

The passage about training us comes from my Narration in the Fiction Film (University of Wisconsin Press, 1985), 45. I discuss norms in blog entries on Summer 85, on Moonrise Kingdom, on Nightmare Alley, and elsewhere. A book I’m finishing applies the concept to novels as well as films.

The example of mismatched reverse angles comes from The Irishman (2019). In the first cut, Frank is starting to settle his coat collar, but in the second, his arms are down and the collar is smooth. In a later portion of that second shot, Hoffa gestures freely with his right hand, but in the over-the-shoulder reverse, his arm is at his side and it’s Frank who gets to make a similar gesture. To be fair, I should say that I found some striking reverse-angle mismatches in Strangers on a Train too.

For more on conventions of the domestic thriller, go to the essay “Murder Culture.”

Radomir D. Kokeš offers an analysis of how Kristin and I have used the concept of norm. We are grateful for his careful discussion of our work and his exposition of the achievement of literary theorist Jan Mukarovský. See “Norms, Forms and Roles: Notes on the Concept of Norm (not just) in Neoformalist Poetics of Cinema,” in Panoptikum (December 2019), available here.

Strangers on a Train (1951).

Can the science of mirror neurons explain the power of camera movement? A guest post by Malcolm Turvey

2001: A Space Odyssey (1968).

DB here:

Over the years we’ve brought you several guest posts from friends whose research we admire. The list includes Matthew Bernstein, Kelley Conway, Leslie Midkiff DeBauche, Eric Dienstfrey, Rory Kelly, Tim Smith, Amanda McQueen, Jim Udden, David Vanden Bossche, and our regular collaborator Jeff Smith (most recently, on Once Upon a Time in Hollywood…).

Today our guest is Malcolm Turvey, Sol Gittleman Professor in Film and Media Studies at Tufts University. Malcolm has long been a voice calling for rigorous humanistic study of film. His books include Doubting Vision: Film and the Revelationist Tradition (2008) and The Filming of Modern Life: European Avant-Garde Film of the 1920s (2011). Just this year he published Play Time: Jacques Tati and Comedic Modernism. (More on this in a future blog entry.) Among his many specialties, Malcolm applies the philosophical tools of conceptual analysis to problems of film criticism and theory.

My previous entry discussed some ways psychological research has helped me understand how films work, and I touched on the recent efforts to invoke mirror neurons to explain some effects that movies have on us. Today Malcolm takes a deeper plunge into this line of thinking, and the result is an exciting instance of how careful intellectual debate can be carried out in film studies. It’s also a cautionary tale about relying on scientific research to explain the appeal of artworks. A more extensive version of this piece is slated for publication in Projections: The Journal of Movies and Mind.

In Alfred Hitchcock’s Notorious (1946), Alex Sebastian (Claude Rains) is one of a group of Nazis who have relocated to Brazil after WWII. He has unwittingly married Alicia Hubermann (Ingrid Bergman), an undercover American agent spying on his circle. When Alicia learns that their scheme somehow involves the wine bottles locked in her husband’s cellar, she decides to procure the key so that she and her handler (and lover), Devlin (Cary Grant), can investigate.

Her opportunity comes in a characteristically suspenseful scene. Alicia nervously contemplates her husband’s keychain lying on a bureau as he finishes taking a shower nearby. She bravely steals the key and barely escapes discovery as Alex emerges from the bathroom. But what is the source of the scene’s suspense?

In a provocative new book titled The Empathic Screen, Vittorio Gallese and Michele Guerra claim the suspense should be attributed primarily to a brief, “human-like” camera movement toward the keys that occurs as Alicia looks at them. Moreover, they draw on neuroscience, specifically the science of mirror neurons, to make their case.

Here is the scene.

Are Gallese and Guerra right about the role played by the camera movement? For reasons I’ll give shortly, I doubt they are. More importantly, I question whether the neuroscientific evidence they lean on supports their case. This may seem like nit-picking, but I think their appeal to neuroscience contains valuable lessons about how students of film should–and shouldn’t–engage with scientific research.

Science and film studies

Readers of this blog are likely familiar with the idea that science has an important role to play in film studies. Since 1985, David Bordwell has drawn on contemporary cognitive psychology to answer questions about perceiving and understanding narrative films, thereby launching a new paradigm in film theory that has come to be known as cognitivism. In his previous entry, David offers an overview of his current thinking about cognition and movies.

Cognitivism has generated much heat in academic film studies. But at its heart is what should be an uncontroversial principle: that those who wish to understand the perceptual, cognitive, and affective capacities with which we engage with art should turn to the work of those who know something about these capacities, namely, the psychologists and other scientists who study them empirically and propose theories about them in light of their findings.

This is because our pre-scientific, “folk” understanding of our psychological capacities is usually non-existent or flawed. It is, for instance, hard to explain why we see still frames as moving images when they are projected above a certain speed merely by reflecting on our “phenomenological” experience of watching movies.

Perceptual psychologists, however, have studied this phenomenon empirically and have proposed explanations for it, such as critical flicker fusion frequency and the phi phenomenon (although like all scientific explanations, these are open to revision and even falsification in the light of new evidence and theorizing). The same is true of other features of our experience of films, and cognitive film scholars have built on David’s work by drawing on contemporary scientific knowledge of emotion, music, moral psychology and much else.

This does not mean that film studies is or should be a science. As David and Kristin’s blog entries repeatedly demonstrate, many of the things we want to know about cinema and the other arts can be discovered through non-scientific, humanistic methods such as analyzing films and researching the context in which they were made. In other words, our methods should be tailored to the questions we ask. Cognitivism argues that some important questions about the cinema can be answered by science, not that all or even most can.

The deceptive authority of Science

That said, I worry that engaging with science in a responsible manner is much more difficult than some of my cognitivist colleagues acknowledge. That difficulty is due, in part, to the “authority” of science.

Because of this authority, many non-scientists might assume that science is more settled than it often is, especially in a human science such as psychology. Those of us who aren’t scientists could be tempted to think that a scientific argument must be true because, well, there are scientific data to support it.

But as the recent “repligate” controversy in psychology and elsewhere shows, just because a scientist can present evidence for their views does not make them true. Data can be unreliable and the conclusions drawn from them unwarranted, as we are witnessing on a daily basis during the coronavirus pandemic.

Although there are occasional scientific revolutions, if science makes progress, something that some philosophers have questioned, it usually does so incrementally through trial and error, and there is much more failure than success. Those of us who don’t participate in this dialectical process might be apt to forget the provisional, tenuous status of much scientific research and accord it more certainty than it warrants.

Mirror neurons: The controversy

This has happened, I believe, with mirror neurons, a new scientific paradigm that has emerged over the past few decades and that some film scholars have started to draw on to explain some of our responses to films, such as empathizing with characters.

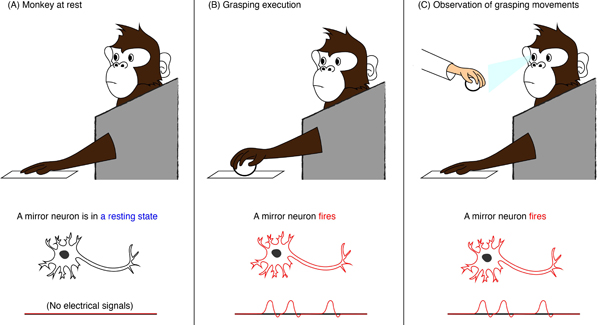

Mirror neurons are neurons that fire both when a subject executes a movement and when the subject sees the same movement executed by another. They were first discovered in the early 1990s in the motor cortex of pigtail macaque monkeys by a group of neuroscientists in Parma, Italy, and they have been invoked to explain a wide array of human behaviors such as language, imitation, empathy, art appreciation, and autism.

This is because mirror neurons appear to provide an explanation for how macaques and human beings understand the actions of their conspecifics. Researchers speculate that, if the same neurons fire when a subject reaches for an object, say food, and when the subject observes another agent reaching for food, the subject must be simulating the observed reaching-for-food action in its brain without actually executing it.

By simulating the observed reaching-for-food movement in its neurons, the subject knows the meaning of the movement–reaching for food–because it has performed the movement itself in the past, and attributes that meaning to the action being executed by the agent it observes, thereby comprehending it. As Marco Iacoboni puts it in a popular treatment of the subject:

To see . . . athletes perform is to perform ourselves. Some of the same neurons that fire when we watch a player catch a ball also fire when we catch a ball ourselves. It is as if by watching, we are also playing the game. We understand the players’ actions because we have a template in our brains for that action, a template based on our own movements.

Yet within neuroscience, mirror-neuron explanations of human behavior are controversial and contested, and no less an authority than Steven Pinker has referred to them as “an extraordinary bubble of hype.” Meanwhile, cognitive scientist Gregory Hickok has written an exhaustive book on the subject with a title that says it all: The Myth of Mirror Neurons.

Film scholars who appeal to the mirror neuron paradigm have not engaged with criticisms of it. Hence, their neural explanations of cinema appear to be supported by a scientific consensus that is in fact lacking, and they may not be drawing on the best current science.

For example, the philosopher of film Dan Shaw maintains that “The discovery of the existence and emotive function of mirror neurons confirms that we simulate other people’s emotions in a variety of ways, even in cinematic contexts,” and he views mirror neurons as the neurophysiological foundation for cinematic empathy. Notice how Shaw makes a strong claim here by using the word “confirms.”

Yet, although Shaw writes that “it is a cliché that monkeys are good imitators,” Pinker suggests that macaques do not imitate and they have “no discernible trace of empathy.” This is despite their possession of mirror neurons. Thus, the mere presence of mirror neurons or a mirror system in a creature cannot be evidence, in and of itself, for the creature’s capacity for empathy. While it might provide one of the neural foundations for empathy or contribute to its realization in some way, much more is needed than mirror neurons or a mirror system for a creature to empathize. Their presence certainly doesn’t “confirm” that we empathize with characters in film.

It is, however, a different, equally pernicious consequence of the authority of science that I wish to highlight here using mirror neurons. The apparent authority of a scientific paradigm can lead scholars to cherry-pick and mischaracterize our artistic practices in order to fit the science. This brings us back to Gallese, one of the co-discoverers of mirror neurons, and Guerra, and their claims about camera movement.

Camera movement and mirror neurons

Gallese and Guerra argue that our mirror neurons not only simulate the emotions of film characters but also the anthropomorphic movements of the camera recording them.

We maintain that the functional mechanism of embodied simulation expressed by the activation of the diverse forms of resonance or neural mirroring discovered in the human brain play an important role in our experience as spectators. Our ability to share attitudes, sensations, and emotions with the actors, and also with the mechanical movements of a camera simulating a human presence, stems from embodied bases that can contribute to clarifying the corporeal representation of the filmic experience. [My emphasis.]

In support of their contention that the mirror neurons of film viewers simulate human-like camera movements, Gallese and Guerra cite an experiment they participated in. This measured the motor cortex activation of nineteen subjects who were shown short video clips of a person grasping an object. The action was filmed in four different ways: with a still camera, a zoom, a dolly, and a Steadicam.

“The results were positive,” Gallese and Guerra conclude. “Shortening the distance between the participant and the scene by moving the camera closer to the actor or actress resulted in a stronger activation of the motor simulation mechanism expressed by the mirror neurons” relative to the clips shot with a still camera. Moreover, the subjects rated those scenes filmed with a moving camera as more “involving.”

Gallese and Guerra rely on this single experiment to make a bold argument about the role of camera movement in the design of films and its effect on the experience of film viewers. “The involvement of the average spectator [in a film] is directly proportional to the intensity of camera movements.” This is because “the sense of participation in the camera action is undoubtedly enhanced by the fact that its behavior is interpreted by both filmmakers and spectators according to evident and automatic anthropomorphological analogies.”

When scenes are recorded with human-like camera movements, they seem to be suggesting, our mirror neurons simulate these camera movements because they are like actions we ourselves have performed. This in turn results in a feeling of “involvement” or “participation” in the scene on the part of the film spectator.

There is much one could question about this experiment and the conclusions Gallese and Guerra draw from it. For example, if it is camera movement that elicits mirror neuron simulation which in turn gives rise to a sense of immersion, viewers should feel involved in the camera movement, not the scene it films. Indeed, what is depicted in the scene should be irrelevant to the viewer’s feeling of involvement if it is the camera movement that gives rise to this feeling by eliciting mirror neuron simulation.

Making the art fit the science

It is, however, the theory’s cherry-picking and mischaracterization of the artistic practice of cinema that most concern me here. According to this theory, as we have seen, “the involvement of the average spectator [in a film] is directly proportional to the intensity of camera movements.” The explanatory claim is that anthropomorphic camera movements, in other words those that resemble the human action of walking through space, elicit the mirror neuron simulation that gives rise to the viewer’s immersion in the film.

This is a strong claim, and if it were true it would have profound implications for both the study of film and filmmaking. It would mean that the more anthropomorphic camera movements that films contain, the more involving they would be for viewers. It would also mean that scenes filmed with human-like camera movements would be more involving than those shot with a still camera, and that scenes filmed with non-anthropomorphic camera movements would be less involving than those shot with anthropomorphic ones.

The latter is due to the mirror neuron simulation theory’s argument that we can only simulate movements we ourselves have performed. Hence, camera movements must be like ones we have executed ourselves in order for our mirror neurons to simulate them and produce the requisite sense of immersion.

Before assessing this claim, one question needs to be answered. What, exactly, is meant by involvement, participation and immersion? After all, there are a number of different kinds of possible involvement in a film.

We can be cognitively immersed in a film when we are intensely interested in the outcome of the plot or the revelations of a documentary. We can also be emotionally involved, as when we feel strong emotions toward the people or events depicted in the film. There is also aesthetic involvement when we pay close attention to and evaluate the design properties of a film. Then there’s physical or corporeal involvement, as when we are physically impacted by film techniques, such as the startle effect or bright lights and loud sounds. Doubtless there are other kinds of involvement too.

Gallese and Guerra never explicitly define what they mean by involvement, although on occasion they mention a “sensation of immersion in the spatiotemporal dimension of film.” In an earlier text they claimed that, by using the camera to mimic bodily movement, filmmakers make audiences feel that “we are inside the diegetic world, we experience the movie from a sensory-motor perspective and we behave ‘as if’ we were experiencing a real life situation.”

Personally, I am not sure what they mean by this. I have never felt immersed in the space and time of a film in the sense of somehow thinking or feeling that I am actually inside it. More importantly, it is not clear that the subjects of the experiment on which their theory is based meant spatiotemporal involvement as opposed to cognitive, emotive, aesthetic or other kinds of immersion when they rated how involved they felt in the clips they were shown. Thus, Gallese and Guerra’s experiment may provide no empirical evidence at all for their occasional references to spatiotemporal participation.

Confusingly, however, Gallese and Guerra sometimes seem to mean involvement in another sense of the term I have clarified. Regarding the brief camera movement toward the keychain in the scene from Notorious of Alicia stealing the key, they contend that “The problem that Hitchcock had to solve in this complex sequence was how to bring the spectator to an almost unbearable level of suspense.” They suggest that if Hitchcock had only used “classical editing” to film the scene, “our level of involvement would not be nearly so high.” They conclude: “This is why Hitchcock uses camera movement; it is this movement that creates the overpowering tension.”

Here, Gallese and Guerra seem to be using “involvement” in the emotive sense of feeling strong emotions such as “tension” and “suspense” about the characters and events in the scene, and they make no mention of “spatiotemporal immersion.” Either way, Gallese and Guerra provide no evidence at all that it is this brief camera movement “that creates the overpowering tension” in the scene. While the camera movement is certainly effective in drawing our attention to the keys and conveying Alicia’s anxious focus on them, I conjecture that there is a far more obvious, broadly “cognitive” reason for the scene’s suspense.

This is the possibility that Alicia, with whom we sympathize, will be caught stealing the key by her husband, who is a ruthless Nazi. Gallese and Guerra, however, discount such a narrative-based explanation for the suspense. They argue that “What strikes us most in films like Notorious is Hitchcock’s almost complete indifference to the plot” and that “the state of suspense in which we find ourselves at every viewing of Notorious has nothing whatsoever to do with the story.”

Yet, according to Hitchcock biographer Donald Spoto, Hitchcock himself wrote the outline for Notorious in late 1944, and then spent three weeks closeted with Ben Hecht writing the script, which was further modified in late-night script sessions with David O. Selznick before the project was eventually sold to RKO and filming began in October 1945. While it may be true that Hitchcock tended to see the plots of his films as merely a means to creating the arresting images and eliciting the strong emotions from his audiences that truly interested him, this does not mean he was “indifferent” to plot. He spent considerable time developing his scripts, and he was keen to work with talented screenwriters such as Hecht.

Nor is it plausible that the suspense in Notorious “has nothing whatsoever to do with the story.” Indeed, suspense is usually defined as a state of anxious uncertainty about what will happen in the story. If suspense has nothing to do with the story, it is hard to know what viewers feel suspense about. In the key-stealing scene in Notorious, it is surely the case that we are anxious about the possibility that Alicia will be caught in the act of stealing the key by her husband, an event that might happen in the narrative. If not, what else might the suspense be directed at?

Of course, the suspense in this scene is not solely dependent on the story but also on the scene’s style. But there are other stylistic techniques that play a much bigger role than the camera movement in the creation of suspense in the scene. For example, Alex’s shadow is visible on his partially open door as he towels himself dry and moves around the shower room.

This activity suggests that he has finished his shower and will emerge at any second. It starts to seem more likely that Alicia will be caught stealing the key, thereby intensifying the suspense. Meanwhile, other than delaying the scene’s outcome by a few seconds, it is not clear how the camera movement toward the keychain itself intensifies the scene’s suspense.

What is happening in the narrative and what is conveyed by other stylistic techniques, such as Alex’s shadow on the door and the music on the soundtrack, are the factors that intensify the scene’s tension. It seems highly unlikely that “our level of involvement would not be nearly so high” in the absence of the camera movement, in the sense of feeling tension and suspense about the scene’s outcome. Certainly, Gallese and Guerra provide no evidence to this effect.

Furthermore, as I’ve already mentioned, Gallese and Guerra’s theory at best explains our sense of involvement in the camera movement, not the scene it films. Recall that it is the camera movement itself that our mirror neurons simulate. It is unclear from their theory, therefore, how our putative feeling of immersion in the camera movement, if indeed we do feel immersed in it, can yield suspense about the concrete actions being filmed.

The category of human-like camera movement, if such a category is functionally relevant to cinema, comprises many fine-grained variations with different effects. Camera movements, for example, can elicit curiosity by making us wonder what they will reveal, as well as surprise when they come to rest on something unexpected. They can startle through their rapidity, as when swish pans suddenly disclose something off-screen, or they can uncover information at an agonizingly slow pace. They can reveal characters’ mental states through push-ins that show us that a character is concentrating hard on something, or they can hide information from us by moving away from it. They can also be aesthetically pleasing, as when we marvel at their gracefulness or the intricacy with which their movements are coordinated with those of the characters. None of these sources of the power of camera movement are explained by arguing that mirror neurons fire in response to anthropomorphic camera movements, if indeed they do.

Most broadly, as David points out in his previous entry, there is much that their theory cannot capture or explain about the power of framing. A static camera can evoke tremendous suspense, as David’s example from Hou’s Summer at Grandpa’s illustrates. It is hard to see what a tracking shot would add at such a moment.

The lessons of film history

Of course, just as damaging to the theory are the countless examples from the rich history of film of highly suspenseful, tension-filled, and in other ways involving scenes that lack anthropomorphic camera movements or that contain non-anthropomorphic ones.

In Notorious, right after the scene in which Alicia steals the key and is nearly caught by Alex, there is a crane shot in which the camera, having panned across the party in the hallway below from a first-floor landing, glides smoothly down toward Alicia talking to Alex and some guests in the hallway and ends in a close-up on her hand holding the key to the cellar.

Given that no human being could perform this movement, our mirror neurons shouldn’t be able to simulate it and produce a feeling of involvement in it. Yet, I hypothesize that, for most viewers, this camera movement creates a strong sense of cognitive, emotive, aesthetic, and other forms of engagement in the scene.

Among other things, this movement prompts us to wonder how Alicia is going to gain entry to the wine cellar without her husband noticing, thereby intensifying our sympathetic concern for her and our suspense about whether she will be caught. And in its overtness, it might make us think about Hitchcock’s choice of technique and the reasons behind it. For some, it might even induce that sense of “spatiotemporal immersion” that Gallese and Guerra mention given that it brings our perceptual perspective close to Alicia’s in the midst of the party, although I personally don’t feel this.

Either way, in this case, it cannot be due to mirror neuron simulation that we experience these forms of immersion. This is a non-anthropomorphic camera movement that human beings cannot execute themselves and therefore cannot simulate with their mirror neurons.

An example from a film by a different director would be the shots in 2001: A Space Odyssey (Stanley Kubrick, 1968) of the ship carrying Dr Floyd (William Sylvester) docking with a space station while Strauss’s Blue Danube waltz plays on the soundtrack. (See image surmounting today’s entry.) For many, the smooth camera movements through space in this sequence evoke an intense sense of weightlessness, thereby creating a physical or corporeal form of involvement in the film. Yet, very few of us have moved in space or have experienced weightlessness, meaning that our mirror neurons should not be able to simulate these camera movements.

Then there are the copious examples of involving scenes that lack camera movement. Hitchcock’s oeuvre contains many, such as the infamous shower scene in Psycho (1960), which is devoid of camera movement during the 20 seconds or so in which the stabbing of Marion Crane takes place. Another example is the highly suspenseful sequence in Strangers on a Train (1951) in which Bruno (Robert Walker) drops the cigarette lighter belonging to Guy (Farley Granger) down a drain while he is on his way to plant the lighter at the amusement park where he murdered Guy’s wife, Miriam (Kasey Rogers). Bruno wishes to implicate Guy in the murder, and Guy, who is a professional tennis player, has guessed Bruno’s plan and is trying to complete a tennis match in time to stop him from planting the lighter. Hitchcock cuts back and forth between the tennis match and Bruno’s efforts to reach down into the drain and retrieve the lighter.

Interestingly, while there is some camera movement at the beginning of the sequence, as the suspense builds, the camera movement lessens. Hitchcock relies largely on still shots of Bruno’s grimacing face as he reaches into the grate, his hand inside the grate and the lighter below it, and the faces of the referees and spectators at the tennis match. Only the shots of Guy and the other tennis player contain a little movement when the camera slightly reframes them as they move to hit the tennis ball.

This sequence is considered one of the most suspenseful (and thereby emotionally involving) in Hitchcock’s oeuvre, yet it defies Gallese and Guerra’s prediction that “The involvement of the average spectator [in a film] is directly proportional to the intensity of camera movements.”

So does the astonishing sequence in William Wyler’s The Little Foxes (1941) in which Horace (Herbert Marshall), having told his estranged wife, Regina (Bette Davis), of his plans to leave his fortune to their daughter, begins experiencing painful symptoms of his heart disease as she tells him how much she despises him.

Horace reaches for his heart medication but knocks over the bottle and its contents and begs his wife to fetch another bottle of medication from upstairs. She, however, sits immobile while Horace, realizing she will not help him and wants him to die, staggers around her, clinging to the wall, and stumbles upstairs before collapsing on the staircase. An agonizing long take lasting about forty seconds shows Regina in medium shot sitting while her husband, who is out of focus, moves toward the stairs, and the shot is immobile except for slight reframings to keep Horace partly visible in the shot.

This is an intensely suspenseful, emotionally involving moment as we wonder whether Horace will reach his medication in time, or Regina or someone else will take action to help him. Yet, there is no anthropomorphic camera movement to elicit our sense of involvement in the scene. On the contrary, according to many critics, it is precisely the lack of camera movement that contributes to its emotional intensity. As André Bazin noted, “Nothing could better heighten the dramatic power of this scene than the absolute immobility of the camera,” in part because it mirrors and emphasizes the “criminal inaction” of Horace’s wife, Regina, who is hoping he will fail to reach his medication and die so that she can be rid of him and claim his fortune.

Gallese and Guerra could perhaps protest that I have misconstrued their theory. Although their claim that “The involvement of the average spectator [in a film] is directly proportional to the intensity of camera movements” seems to suggest that anthropomorphic camera movement is both a necessary and sufficient condition for occasioning involvement in a film, they might admit that immersion can be elicited in other ways. Instead, they could allow, human-like camera movement is merely a sufficient condition for involvement, not a necessary one. When it is present, we feel immersed in films, although this is not the only route to immersion.

However, it is not difficult to think of films containing lots of camera movement of both the anthropomorphic and non-anthropomorphic kinds that fail to engage viewers. An example is Kenneth Branagh’s Mary Shelley’s Frankenstein (1994), a film in which, as David Ansen of Newsweek put it, “The camera, and the actors, are always in a mad dash from here to there” (Ansen 1994). Nevertheless, critics, who according to Gallese and Guerra “usually get much more excited” when camera movement is present, largely panned the film. (For what it’s worth, the film has a critics’ score of 38% and audience score of 49% on Rotten Tomatoes.) As Ansen put it:

What we get is Romanticism for short attention spans; a lavishly decorated horror movie with excellent elocution. [Branagh’s] strategy undermines itself–there’s a lot of sound and fury, but all the grand passions are indicated rather than felt. Watching the movie work itself into an operatic frenzy, one remains curiously detached: the grand gestures are there, but where’s the music? [My emphasis.]

Anthropomorphic camera movements do not, therefore, even appear to be a sufficient condition for immersion in a film, let alone a necessary one.

The way forward?

Gallese and Guerra, it seems to me, cherry-pick examples from films that appear to support their theory, and ignore obvious counterexamples even in the films they examine. They also mischaracterize scenes such as the one in which Alicia steals the key, overlooking other evident sources of “involvement,” a term they fail to define consistently. They do so, I suspect, because they are in thrall to the mirror neuron theory, and they therefore force the art to fit the theory.

None of this means that cognitivism should be abandoned or that film scholars shouldn’t be turning to science. Nor does it mean that camera movement doesn’t sometimes elicit and intensify emotions such as suspense. Moreover, neuroscience may well be able to shed light on some of the reasons why this happens, although many more experiments are needed to demonstrate this than the single one relied on by Gallese and Guerra.

But it does suggest that our engagement with the sciences should be governed by two principles.

First, when drawing on a scientific theory, it is crucial that film scholars also consider criticisms of it. Despite its authority, scientific research is typically provisional. Those of us in the humanities are not usually in a position to determine who is right in a scientific debate. So we should entertain criticisms of the scientific theory, in case they reveal pitfalls and other problems in applying the theory to cinema, or show the theory to be on far less secure ground than it may seem to be.

Second, those of us who are humanistic scholars of film should trust the knowledge we have gleaned from decades of work on the cinema. We shouldn’t simply accept conclusions that contradict this knowledge because they are supposedly scientific. We should not, in other words, be cowed by the authority of science. While we haven’t gotten everything right, most of us know from a little reflection, for example, that anthropomorphic camera movements aren’t required for greater “involvement” in a film.