Watching you watch THERE WILL BE BLOOD

Monday | February 14, 2011

DB here:

Today’s entry is our first guest blog. It follows naturally from the last entry on how our eyes scan and sample images. Tim Smith is a psychological researcher particularly interested in how movie viewers watch. You can follow his work on his blog Continuity Boy and his research site.

I asked Tim to develop some of his ideas for our readers, and he obliged by providing an experiment that takes off from my analysis of staging in one scene of There Will Be Blood, posted here back in 2008. The result is almost unprecedented in film studies, I think: an effort to test a critic’s analysis against measurable effects of a movie. What follows may well change the way you think about visual storytelling.

Tim’s colorful findings also suggest how research into art can benefit from merging humanistic and social-scientific inquiry. Kristin and I thank Tim for his willingness to share his work.

Tim Smith writes:

David’s previous post provided a nice introduction to eye tracking and its possible significance for understanding film viewing. Now it is my job to show you what we can do with it.

Continuity errors: How they escape us

Knowing where a viewer is looking is critical to beginning to understand how a viewer experiences a film. Only the visual information at the centre of attention can be perceived in detail and encoded in memory. Peripheral information is processed in much less detail and mostly contributes to our perception of space, movement and general categorisation and layout of a scene.

The incredibly reductive nature of visual attention explains why large changes can occur in a visual scene without our noticing. Clear examples of this are the glaring continuity errors found in some films. Lighting that changes throughout a scene, cigarettes that never burn down, and drinks that instantly refill plague films and television but we rarely notice them except on repeated or more deliberate viewing. In my PhD thesis I created a taxonomy of continuity errors in feature films and related them to various failings during pre-production, filming, and post-production.

Our inability to detect continuity errors was elegantly demonstrated in a study by Dan Levin and Dan Simons. In their study continuity errors were purposefully introduced into a film sequence of two women conversing across a dinner table. If you haven’t seen it before, watch the video here before continuing, and see how many continuity errors you can spot.

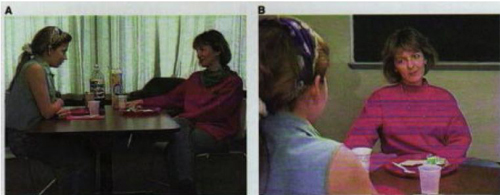

Two frames from the clip used by Levin and Simons (1997). Continuity errors were deliberately inserted across cuts (e.g., the disappearing scarf), and viewers were asked after watching the video whether they noticed any.

The short clip contained nine continuity errors, such as a scarf that changed colour, then disappeared, plates that changed colour and hands that changed position. During the first viewing, viewers were told to pay close attention but were not informed about the continuity errors. When asked afterwards if they noticed anything change, only one participant reported seeing anything and that was a vague sense that the posture of the actors changed. Even during a second viewing in which they were instructed to detect changes, viewers only detected an average of 2 out of the 9 changes and tended to notice changes closest to the actors’ faces such as the scarf.

Although Levin and Simons did not record viewer eye movements, my own experiments investigating gaze behaviour during film viewing indicate that our eyes will mostly be focussed on faces and spend virtually no time on peripheral details. If you as a viewer don’t fixate a peripheral object such as the plate, you are unable to represent the colour of the plate in memory and therefore cannot detect the change in colour when you later refixate it.

Tracking gaze

To see how reductive and tightly focused our gaze is whilst watching a film, consider Paul Thomas Anderson’s There Will Be Blood (TWBB; 2007). In an earlier post, David used a scene from this film as an example of how staging can be used to direct viewer attention without the need for editing.

The scene depicts Paul Sunday describing the location of his family farm on a map to Daniel Plainview, his partner Fletcher Hamilton, and his son H.W. The entire scene is treated in a long, static shot (with a slight movement in at the beginning). Most modern film and television productions would use rapid editing and close-up shots to shift attention between the map and the characters within this scene. This frenetic style of filmmaking–which David termed intensified continuity in his book The Way Hollywood Tells It (2006)–breaks a scene down into a succession of many viewpoints, rapidly and forcefully presented to the viewer.

Intensified continuity is in stark contrast to the long-take style used in this scene from TWBB. The long-take style, which was common in the 1910s and recurred at intervals after that period, relies more on staging and compositional techniques to guide viewer attention within a prolonged shot. For example, lighting, colour, and focal depth can guide viewer attention within the frame, prioritising certain parts of the scene over others. However, even without such compositional techniques, the director can still influence viewer attention by co-opting natural biases in our attention: our sensitivity to faces, hands, and movement.

In order to see these biases in action during TWBB we need to record viewer eye movements. In a small pilot study, I recorded the eye movements of 11 adults using an Eyelink 1000 (SR Research) eyetracker. This eyetracker uses an infrared camera to accurately track the viewer’s pupil every millisecond. The movements of the pupil are then analysed to identify fixations, when the eyes are relatively still and visual processing happens; saccadic eye movements (saccades), when the eyes quickly move between locations and visual processing shuts down; smooth pursuit movements, when we process a moving object; and blinks.
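To make the distinction between fixations and saccades concrete, here is a minimal sketch of a velocity-threshold classifier, one common textbook approach. It is not a description of how the Eyelink software parses its data, and it ignores smooth pursuit and blinks entirely. The function names, the 30 degrees-per-second threshold, and the 40 pixels-per-degree conversion are all assumptions chosen for illustration.

```python
import math


def classify_samples(xs, ys, sample_rate_hz=1000.0,
                     px_per_deg=40.0, threshold_deg_per_s=30.0):
    """Label each gaze sample 'fix' or 'sac' from its point-to-point velocity.

    px_per_deg and threshold_deg_per_s are illustrative assumptions,
    not values taken from the study.
    """
    labels = ["fix"]  # the first sample has no predecessor; assume fixation
    for i in range(1, len(xs)):
        dist_px = math.hypot(xs[i] - xs[i - 1], ys[i] - ys[i - 1])
        velocity = (dist_px / px_per_deg) * sample_rate_hz  # degrees per second
        labels.append("sac" if velocity > threshold_deg_per_s else "fix")
    return labels


def group_fixations(labels, sample_rate_hz=1000.0):
    """Collapse runs of 'fix' samples into (start_index, duration_ms) pairs."""
    fixations = []
    start = None
    for i, label in enumerate(labels):
        if label == "fix" and start is None:
            start = i
        elif label != "fix" and start is not None:
            fixations.append((start, (i - start) * 1000.0 / sample_rate_hz))
            start = None
    if start is not None:  # close a fixation that runs to the end of the data
        fixations.append((start, (len(labels) - start) * 1000.0 / sample_rate_hz))
    return fixations


# Toy recording: the eye rests, jumps 30 pixels to the right, then rests again.
xs = [100.0] * 5 + [110.0, 120.0, 130.0] + [130.0] * 4
ys = [50.0] * 12
print(group_fixations(classify_samples(xs, ys)))
```

With real data the fixation runs would last hundreds of samples rather than a handful, and a production parser would also need rules for blinks and for slow pursuit of moving objects.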

Eye movements on their own can be interesting for drawing inferences about cognitive processing, but when thinking about film viewing, where a viewer looks is of most interest. As David demonstrated in his last post, analysing where a viewer looks whilst viewing a static scene, such as Repin’s painting An Unexpected Visitor, is relatively simple. The gaze of a viewer can be plotted on to the static image and the time spent looking at each region, such as a character’s face or an object in the scene, can be measured.

However, when the scene is moving, it is much more difficult to relate the gaze of a viewer on the screen to objects in the scene. To overcome this difficulty, my colleagues and I developed new visualisation techniques and analysis tools. These efforts were part of a large project investigating eye movement behaviour during film and TV viewing (Dynamic Images and Eye Movements, what we call the DIEM project). These techniques allow us to capture the dynamics of gaze during film viewing and display it in all its fascinating, frenetic glory.

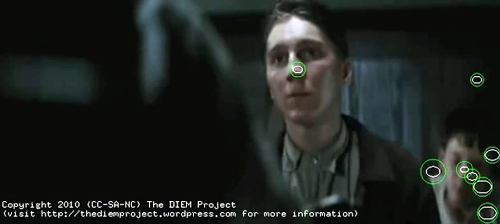

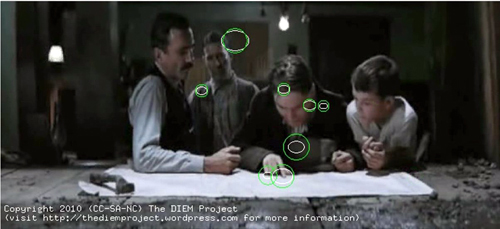

To begin, the gaze location of each viewer is placed as a point on the corresponding frame of the movie. The point is represented as a circle with the size of the circle denoting how long the eyes have remained in the same location, i.e. fixated that location. We then add the gaze location of all viewers on to the same frame. Although the viewers watched the clip at different times, plotting all viewers together allows us to look for similarities and differences between where people look and when they look there. This figure shows the gaze location of 8 viewers at one moment in the scene. (The remaining 3 viewers are blinking at this moment.)

A snapshot of gaze locations of 8 viewers whilst watching the “map” sequence from There Will Be Blood (2007). Each green circle represents the gaze location of one participant, with the size of the circle indicating how long the eyes have been in fixation (bigger equals longer).

You have a roving eye

Plotting static gaze points onto a single frame of the movie allows us to see what viewers were looking at in a particular frame, but we don’t get a true sense of how we watch movies until we animate the gaze on top of the movie as it plays back. Here is a video of the entire sequence from TWBB with superimposed gaze of 11 viewers.

You can also see it here. The main table-top map sequence we are interested in begins at 3 minutes, 37 seconds.

The most striking feature of the gaze behaviour when it is animated in this way is the very fast pace at which we shift our eyes around the screen. On average, each fixation is about 300 milliseconds in duration. (A millisecond is a thousandth of a second.) Amazingly, that means that each fixation of the fovea lasts only about 1/3 of a second. These fixations are separated by even briefer saccadic eye movements, taking between 15 and 30 milliseconds!

Looking at these patterns, our gaze may appear unusually busy and erratic, but we’re moving our eyes like this every moment of our waking lives. We are not aware of the frenetic pace of our attention because we are effectively blind every time we saccade between locations. This process is known as saccadic suppression. Our visual system automatically stitches together the information encoded during each fixation to effortlessly create the perception of a constant, stable scene.

In other experiments with static scenes, my colleagues and I have shown that even if the overall scene is hidden 150 milliseconds into every fixation, we are still able to move our eyes around and find a desired object. Our visual system is built to deal with such disruptions and perceive a coherent world from fragments of information encoded during each fixation.

The second most striking observation you may have about the video is how coordinated the gaze of multiple viewers is. Most of the time, all viewers are looking in a similar place. This is a phenomenon I have termed Attentional Synchrony. If several viewers examine a static scene like the Repin painting discussed in David’s last post, they will look in similar places, but not at the same time. Yet as soon as the image moves, we get a high degree of attentional synchrony. Something about the dynamics of a moving scene leads to all viewers looking at the same place, at the same time.
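One simple way to put a number on how tightly gaze is clustered in a given frame is to measure how far the viewers' gaze points lie from their shared centre. The sketch below does that. It is only an illustrative proxy: the function name and the choice of measure are assumptions, and it is not the clustering analysis reported in the DIEM paper cited at the end of this entry.

```python
import math


def gaze_dispersion(points):
    """Mean distance in pixels of gaze points from their centroid.

    A lower value means viewers are looking closer together, i.e. more
    attentional synchrony. Returns None when there are no gaze points
    (for example, if every viewer is blinking).
    """
    if not points:
        return None
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    return sum(math.hypot(x - cx, y - cy) for x, y in points) / len(points)


# Hypothetical gaze positions for the same frame under two conditions.
scattered = [(100, 80), (520, 90), (310, 250), (600, 300)]
clustered = [(300, 150), (305, 148), (298, 155), (302, 151)]
print(gaze_dispersion(scattered) > gaze_dispersion(clustered))  # True
```

Computed frame by frame, a value like this would dip whenever the viewers converge on one face or gesture and rise when their gaze spreads out across the scene.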

The main factors influencing gaze can be divided into bottom-up involuntary control by the visual scene and top-down voluntary control by the viewer’s intentions, desires, and prior experience. As part of the DIEM project we were able to identify the influence of bottom-up factors on gaze during film viewing using computer vision techniques. These techniques allowed us to dissect a sequence of film into its visual constituents such as colour, brightness, edges, and motion. We found that moments of attentional synchrony can be predicted by points of motion within an otherwise static scene (i.e. motion contrast).

You can see this for yourself when you watch the gaze video. Viewers’ gazes are attracted by the sudden appearance of objects, moving hands, heads, and bodies. The greater the motion contrast between the point of motion and the static background, the more likely viewers will look at it. If there is only one point of motion at a particular moment, then all viewers will look at the motion, creating attentional synchrony.
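The idea of motion contrast can be illustrated with a very crude stand-in: take the brightness change between two consecutive frames and ask where the largest change sits relative to the average change across the frame. This is a sketch under simplifying assumptions (tiny grayscale frames stored as nested lists, hypothetical function names) and is far simpler than the computer vision techniques used in the DIEM project.

```python
def motion_map(prev_frame, next_frame):
    """Absolute per-pixel brightness change between two grayscale frames."""
    return [[abs(b - a) for a, b in zip(prev_row, next_row)]
            for prev_row, next_row in zip(prev_frame, next_frame)]


def strongest_motion(prev_frame, next_frame):
    """Return ((x, y), contrast) for the pixel that changed the most.

    contrast is the peak change minus the mean change over the whole
    frame, so one moving point on a static background scores highly.
    """
    diff = motion_map(prev_frame, next_frame)
    flat = [value for row in diff for value in row]
    mean_change = sum(flat) / len(flat)
    peak, peak_x, peak_y = 0.0, 0, 0
    for y, row in enumerate(diff):
        for x, value in enumerate(row):
            if value > peak:
                peak, peak_x, peak_y = value, x, y
    return (peak_x, peak_y), peak - mean_change


# A static 4x4 frame in which a single pixel brightens between frames.
before = [[0] * 4 for _ in range(4)]
after = [row[:] for row in before]
after[1][2] = 80
print(strongest_motion(before, after))  # ((2, 1), 75.0)
```

If several regions moved at once, the contrast for any one of them would be lower, which matches the observation that a lone point of motion pulls every viewer's gaze while competing motions split it.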

This is a powerful technique for guiding attention through a film. But it’s of course not unique to film. Noticing points of motion is a natural bias which we have evolved by living in the real world. If we were not sensitive to peripheral motion, then the tiger in the bushes might have killed our ancestors before they had a chance to pass their genes down to us.

But points of motion do not exist in film without an object executing the movement. This brings us to David’s earlier analysis of the staging of this sequence from TWBB. This might be a good time to go back and read David’s analysis before we begin testing his hypotheses with eyetracking. Is David right in predicting that, even in the absence of other compositional techniques such as lighting, camera movement, and editing, viewer attention during this sequence is tightly controlled by staging?

All together now

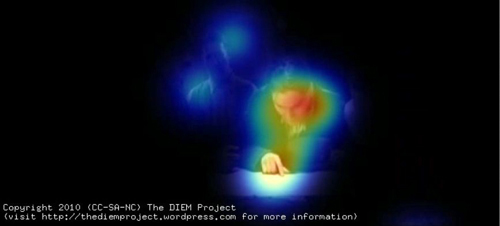

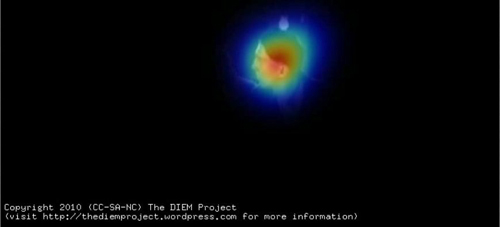

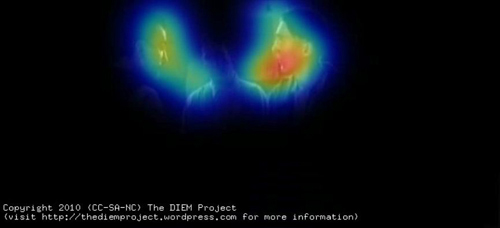

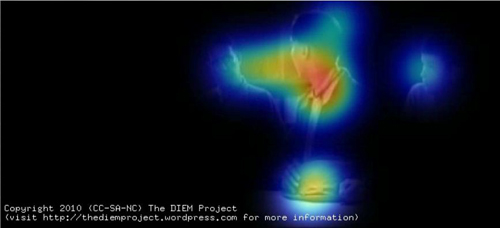

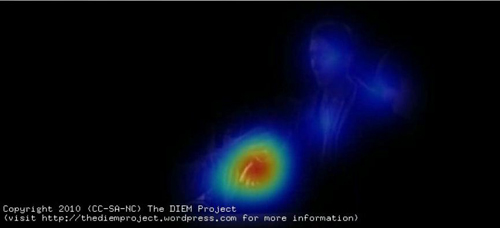

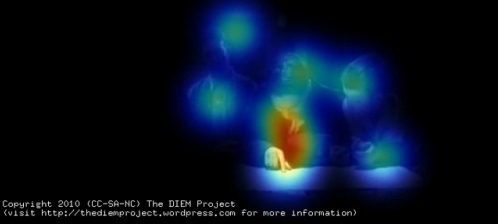

To help us test David’s hypotheses I am going to perform a little visualisation trick. Making sense of where people are looking by observing a swarm of gaze points can often be very tricky. To simplify things we can create a “peekthrough” heatmap. A virtual spotlight is cast around each gaze point. This spotlight casts a cold, blue light on the area around the gaze point. If the gazes of multiple viewers are in the same location their spotlights combine and create a hotter/redder heatmap. Areas of the frame that are unattended remain black. By then removing the gaze points but leaving the heatmap we get a “peekthrough” to the movie which allows us to clearly see which parts of the frame are at the centre of attention, which are ignored and how coordinated viewer gaze is.

Here is the resulting peekthrough video; also available here. The map sequence begins at 3:38.

Here is the image of gaze location I showed above, now matched to the same frame of the peekthrough video.

The gaze data from multiple viewers is used to create a “peekthrough” heatmap in which each gaze location shines a virtual spotlight on the film frame. Any part of the frame not attended is black, and the more viewers look in the same location, the hotter the color.
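The spotlight idea can be sketched in a few lines. The version below assumes a Gaussian falloff around each gaze point and a grayscale frame stored as nested lists; both are simplifications for illustration, the function names are hypothetical, and this is not the CARPE tool itself. The blue-to-red colouring is left out, so only the brightness of the "peekthrough" is modelled.

```python
import math


def gaze_heatmap(gaze_points, width, height, sigma=2.0):
    """Sum a Gaussian 'spotlight' around each gaze point.

    Values are divided by the number of viewers, so a pixel reaches 1.0
    only when every viewer is looking at exactly that spot.
    """
    heat = [[0.0] * width for _ in range(height)]
    if not gaze_points:
        return heat
    for gx, gy in gaze_points:
        for y in range(height):
            for x in range(width):
                dist_sq = (x - gx) ** 2 + (y - gy) ** 2
                heat[y][x] += math.exp(-dist_sq / (2.0 * sigma ** 2))
    count = len(gaze_points)
    return [[min(value / count, 1.0) for value in row] for row in heat]


def peekthrough(frame, heat):
    """Dim each pixel by the heat at that location; unattended areas go black."""
    return [[pixel * h for pixel, h in zip(frame_row, heat_row)]
            for frame_row, heat_row in zip(frame, heat)]


# Two viewers share one location, a third looks elsewhere.
heat = gaze_heatmap([(2, 2), (2, 2), (7, 7)], width=10, height=10, sigma=1.0)
frame = [[100.0] * 10 for _ in range(10)]
visible = peekthrough(frame, heat)
print(visible[2][2] > visible[7][7] > visible[0][9])  # True
```

The shared location shows through more brightly than the lone viewer's spot, and the far corner that nobody looked at stays effectively black.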

David’s first hypothesis about the map sequence is that the faces and hands of the actors command our attention. This is immediately apparent from the peekthrough video. Most gaze is focused on faces, shifting between them as the conversation switches from one character to another.

The map receives a few brief fixations at the beginning of the scene but the viewers quickly realise that it is devoid of information and spend the remainder of the scene looking at faces. The only time the map is fixated is when one of the characters gestures towards it (as above).

We can see the effect of turn-taking in the conversation on viewer attention by analyzing a few exchanges. The sequence begins with Paul pointing at the map and describing the location of his family farm to Daniel. Most viewers’ gazes are focused on Paul’s face as he talks, with some glances to other faces and the rest of the scene. When Paul points to the map, our gaze is channeled between his face and what he is gazing/pointing at.

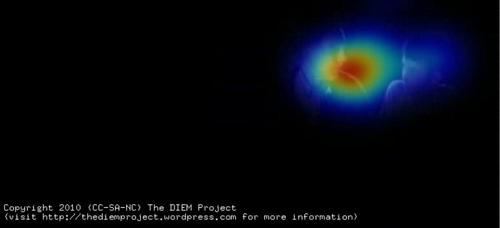

Such gaze prompting and gesturing are powerful social cues for attention, directing attention along a person’s sightline to the target of their gaze or gesture. Gaze cues form the basis of a lot of editing conventions such as the match on action, shot/reverse-shot dialogue pairings, and point-of-view shots. However, in this scene gaze cuing is used in its more natural form to cue viewer attention within a single shot rather than across cuts.

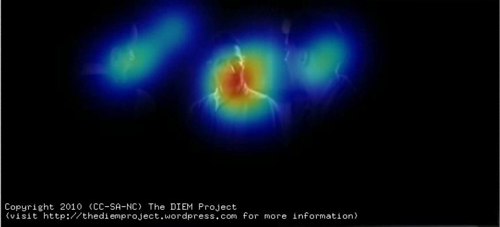

As Paul finishes giving directions, Daniel asks him a question, which immediately results in all viewers shifting their gaze to Daniel’s face. Gaze then alternates between Daniel and Paul as the conversation passes between them. The viewers are both watching the speaker to see what he is saying and also monitoring the listener’s responses in the form of facial expressions and body movement.

Daniel turns his back to the camera, creating a conflict between where the viewer wants to look (Daniel’s face) and what they can see (the back of his head). As David rightly predicted, by removing the current target of our attention the probability that we attend to other parts of the scene is increased, such as H.W., who up until this point has not played a role in the interaction. Viewers begin glancing towards H.W. and then quickly shift their gaze to him when he asks Paul how many sisters he has.

Gaze returns to Paul as he responds.

Gaze shifts from Paul to Daniel as he asks a short question, and then moves to Fletcher as he joins the conversation.

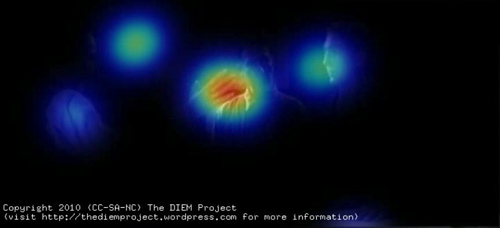

The quick exchanges of dialogue ensure that viewers only have enough time to shift their gaze to the speaker and then shift to the respondent. When gaze dwells longer on a speaker, such as during the exchange between Fletcher and Paul, there is an increase in glances away from the speaker to other parts of the scene such as the other silent faces or objects.

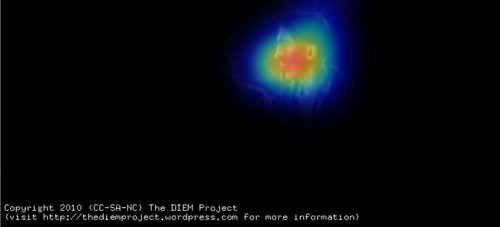

An object that receives more fixations as the scene develops is Paul’s hat, which he nervously fiddles with. At one point, when responding to Fletcher’s question about what they grow on the farm, Paul glances down at his hat. This triggers a large shift of viewer gaze, which slides down to the hat. Likewise, a subtle turn of the head creates a highly significant cue for viewers, steering them towards what Paul is looking at while also conveying his uneasiness.

The most subtle gesture of the scene comes soon after as Fletcher asks about water at the farm. Paul states that the water is generally salty and as he speaks Fletcher shifts his eyes slightly in the direction of Daniel. This subtle movement is enough to cue three of the viewers to shift their gaze to Daniel, registering their silent exchange.

This small piece of information seems critical to Daniel and Fletcher’s decision to follow up Paul’s lead, but its significance can be registered by viewers only if they happened to be fixating Fletcher at the time he glanced at Daniel. The majority of viewers are looking at Paul as he speaks and they miss the gesture. For these viewers, the significance of the statement may be lost, or they may have to deduce the significance either from their own understanding of oil prospecting or other information exchanged during the scene.

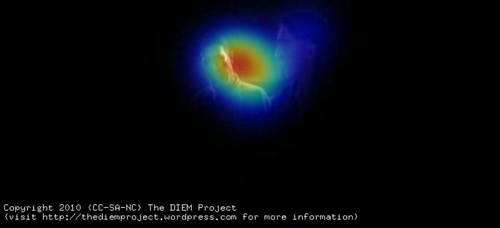

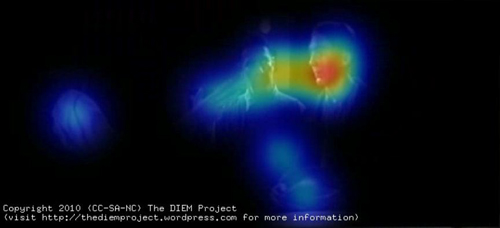

The final and most significant gesture of the scene is Daniel’s threatening raised hand. As Paul goes to leave, Daniel stalls him by raising his hand centre frame in a confusing gesture hovering midway between a menacing attack and a friendly handshake. In David’s earlier post he predicted that the hand would “command our attention.” Viewer gaze data confirm this prediction. Daniel draws all gazes to him as he abruptly states “Listen….Paul,” and lifts his hand.

Gaze then shifts quickly; the raised hand becomes a stopping off point on the way to Paul’s face. . .

. . . finally following Daniel’s hand down as he grasps Paul’s in a handshake.

We like to watch

The rapid sequence of actions clearly guides our attention around the scene: Daniel – Hand – Paul – Hand. David’s analysis of how the staging in this scene tightly controls viewer attention was spot-on and can be confirmed by eyetracking. At any one moment in the scene there is a principal action signified either by dialogue or motion. By minimising background distractions and staging the scene in a clear sequential manner using basic principles of visual attention, P. T. Anderson has created a scene which commands viewer attention as precisely as a rapidly edited sequence of close-up shots.

The benefit of using a single long shot is the illusion of volition. Viewers think they are free to look where they want but, due to the subtle influence of the director and actors, where they want to look is also where the director wants them to look. A single static long shot also creates a sense of space, a clear relationship between the characters, and a calm, slow pace which is critical for the rest of the film. The same scene edited into close-ups would have left the viewer with a completely different interpretation of the scene.

I hope I’ve shown how some questions about film form, style, practice, and spectatorship can be informed by borrowing theory and methods from cognitive psychology. The techniques I have utilised in recording viewer gaze and relating it to the visual content of a film are the same methods I would use if I were conducting an experiment on a seemingly unrelated topic such as visual search. (See this paper for an example.)

The key difference is that the present analysis is exploratory and simply describes the viewing behaviour during an existing clip. What we cannot conclude from such a study is which aspects of the scene are critical for the gaze behaviour we observe. For instance, how important is the dialogue for guiding attention? To investigate the contribution of individual factors such as dialogue we need to manipulate the film and test how gaze behaviour changes when we add or remove a factor. This type of empirical manipulation is critical to furthering our understanding of film cognition and employing all of the tools cognitive psychology has to offer.

But I expect an objection. Isn’t this sort of empirical inquiry too reductive to capture the complexities of film viewing? In some respects, yes. This is what we do. Reducing complex processes down to simple, manageable, and controllable chunks is the main principle of empirical psychology. Understanding a psychological process begins with formalizing what it and its constituent parts are, and then systematically manipulating and testing their effect. If we are to understand something as complex as how we experience film we must apply the same techniques.

As in all empirical psychology the danger is always that we lose sight of the forest whilst measuring the trees. This is why the partnership between film theorists and empiricists like myself is critical. The decades of film theory, analysis, practice and intuition provide the framework and “Big Picture” to which we empiricists contribute. By sharing forces and combining perspectives, we can aid each other’s understanding of the film experience without losing sight of the majesty that drew us to cinema in the first place.

On the importance of foveal detail for memory encoding, see J. M. Findlay, “Eye scanning and visual search,” in The Interface of Language, Vision, and Action: Eye movements and the visual world, ed. J. M. Henderson and F. Ferreira (New York: Psychology Press, 2004), pp. 134-159. Levin and Simons’ continuity-error experiment is explained in D. T. Levin and D. J. Simons, “Failure to detect changes to attended objects in motion pictures,” Psychonomic Bulletin and Review 4 (1997), pp. 501-506.

A note about our equipment and experimental procedure. We presented the film on a 21-inch CRT monitor at a distance of 90 cm and a resolution of 720×328, 25fps. Eye movements were recorded using an Eyelink 1000 eyetracker and a chinrest to keep the viewer’s head still. This eyetracker consists of a bank of infrared LEDs used to illuminate the participant’s face and a high-speed infrared camera filming the face. The infrared light reflects off the face but not the pupil, creating a dark spot that the eyetracker follows. The eyetracker also detects the infrared reflecting off the outside of the eye (the cornea), which appears as a “glint”. By analysing how the glint and the centre of the pupil move as the viewer looks around the screen, the eyetracker is able to calculate where the viewer is looking every millisecond.

As for the heatmaps, the greater the number of viewers, the more consistent the heatmaps. The present pilot study used gaze from only 11 viewers, which introduces a lot of noise into the visualisations. Compare the scattered nature of the gaze in the TWBB video to a similar scene visualised with the gaze of 48 viewers. We would probably see the same degree of coordination in the TWBB clip if we had used more viewers.

For a comprehensive discussion of attentional synchrony and its cause, see Mital, P.K., Smith, T. J., Hill, R. and Henderson, J. M., “Clustering of gaze during dynamic scene viewing is predicted by motion,” Cognitive Computation (in press). Social cues for attention, like shared looks, are discussed in Langton, S. R. H., Watt, R. J., & Bruce, V., “Do the eyes have it? Cues to the direction of social attention,” Trends in Cognitive Sciences 4, 2, pp. 50-59. For more on our inability to detect small discontinuities, see Smith, T. J. and Henderson, J. M., “Edit Blindness: The relationship between attention and global change blindness in dynamic scenes,” Journal of Eye Movement Research (2008) 2 (2), 6, pp. 1-17.

For further information on the Dynamic Images and Eye Movement project (DIEM) please visit http://thediemproject.wordpress.com/. This research was funded by the Leverhulme Trust (Grant Ref F/00-158/BZ) and the ESRC (RES 062-23-1092). To view more visualisations from the project visit this site. The DIEM project partners are myself, Prof. John M Henderson, Parag Mital, and Dr. Robin Hill. Gaze data and visualisation tools (CARPE: Computational and Algorithmic Representation and Processing of Eye-Movements) can also be downloaded from the website. When using or referring to any of the work from DIEM, please reference the Cognitive Computation paper cited above.

Wonderful work in this area has already been conducted by Dan Levin (Vanderbilt), Gery d’Ydewalle (Leuven), Stephan Schwan (KMRC, Tübingen), and the grandfather of the recent revival in empirical cognitive film theory, Julian Hochberg. I am indebted to their pioneering work and excited about taking this research area forward.

Finally, I would like to thank David and Kristin for inviting me to describe some of my work on their wonderful blog. I have been an avid follower of their work for years and David has been a great supporter of my research.

DB PS 26 February: The response to Tim’s blog has been astonishing and gratifying. Tens of thousands of visitors have read his essay here, and his videos have been viewed over 700,000 times on sites across the Web. I’m very happy that so many non-psychologists–scholars, critics, and filmmakers–have found something of value here. The extended discussion on Jim Emerson’s scanners site, in which I participated a little, is especially worth reading. For more comments and replies from Tim and his team, go to Tim’s Continuity Boy blogpage and the DIEM team’s Vimeo page. At Continuity Boy, Tim will post more videos based on his group’s experimental efforts.

DB PS 18 October: Tim has posted a new, equally interesting experiment on tracking non-visible (!) movement on his blogsite.